The db2pro Appliance is a small, preconfigured Ubuntu VM that runs on Oracle VirtualBox. The appliance contains the following components:

Collectors:

The Collectors are a series of configurable bash scripts that gather performance data from the target database servers. The Collectors run at different intervals (hourly, daily, or weekly), which you can configure to suit your requirements.
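The scheduling mechanism for the Collectors is not specified here. As a minimal sketch, assuming each Collector has a configured interval and a recorded last-run time, the check below decides which Collectors are due to run. The collector names and the `collectors_due` helper are hypothetical; on the appliance itself the bash scripts would more likely be scheduled with a tool such as cron.

```python
from datetime import datetime, timedelta

# Hypothetical schedule: collector name -> run interval.
# The real Collectors are bash scripts; these names are illustrative only.
COLLECTOR_INTERVALS = {
    "snapshot_collector": timedelta(hours=1),
    "config_collector": timedelta(days=1),
    "inventory_collector": timedelta(weeks=1),
}


def collectors_due(last_runs, now, intervals=COLLECTOR_INTERVALS):
    """Return the sorted names of collectors whose interval has elapsed.

    last_runs maps collector name -> datetime of its last run.
    A collector with no recorded run is always considered due.
    """
    due = []
    for name, interval in intervals.items():
        last = last_runs.get(name)
        if last is None or now - last >= interval:
            due.append(name)
    return sorted(due)
```

For example, if the hourly collector ran 30 minutes ago, the daily one two days ago, and the weekly one three days ago, only `config_collector` is due.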

Transporter:

The Transporter is a configurable bash script that ships the data packages to the db2pro Processing Engine.

Processing Engine / ETL Engine:

The ETL Engine unpacks the data packages and loads them into the Engine Repository.

Replicators for the db2pro Portal:

The Replicators copy the data to the db2pro Portal, applying additional transformation and summarization along the way. The portal runs on WordPress, the world's most widely used content management system.
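The exact transformations the Replicators apply are not documented here. As an illustration only, the sketch below shows one kind of summarization: rolling raw samples up to a per-database, per-day count, average, and peak. The `(database, timestamp, value)` sample shape and the `summarize_daily` helper are assumptions, not db2pro's actual schema.

```python
from collections import defaultdict
from datetime import datetime


def summarize_daily(samples):
    """Roll raw (database, timestamp, value) samples up to per-day statistics.

    Returns {(database, date): {"count": n, "avg": mean, "max": peak}}.
    """
    buckets = defaultdict(list)
    for database, ts, value in samples:
        # Group by database name and calendar day of the sample.
        buckets[(database, ts.date())].append(value)
    return {
        key: {"count": len(vals), "avg": sum(vals) / len(vals), "max": max(vals)}
        for key, vals in buckets.items()
    }
```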

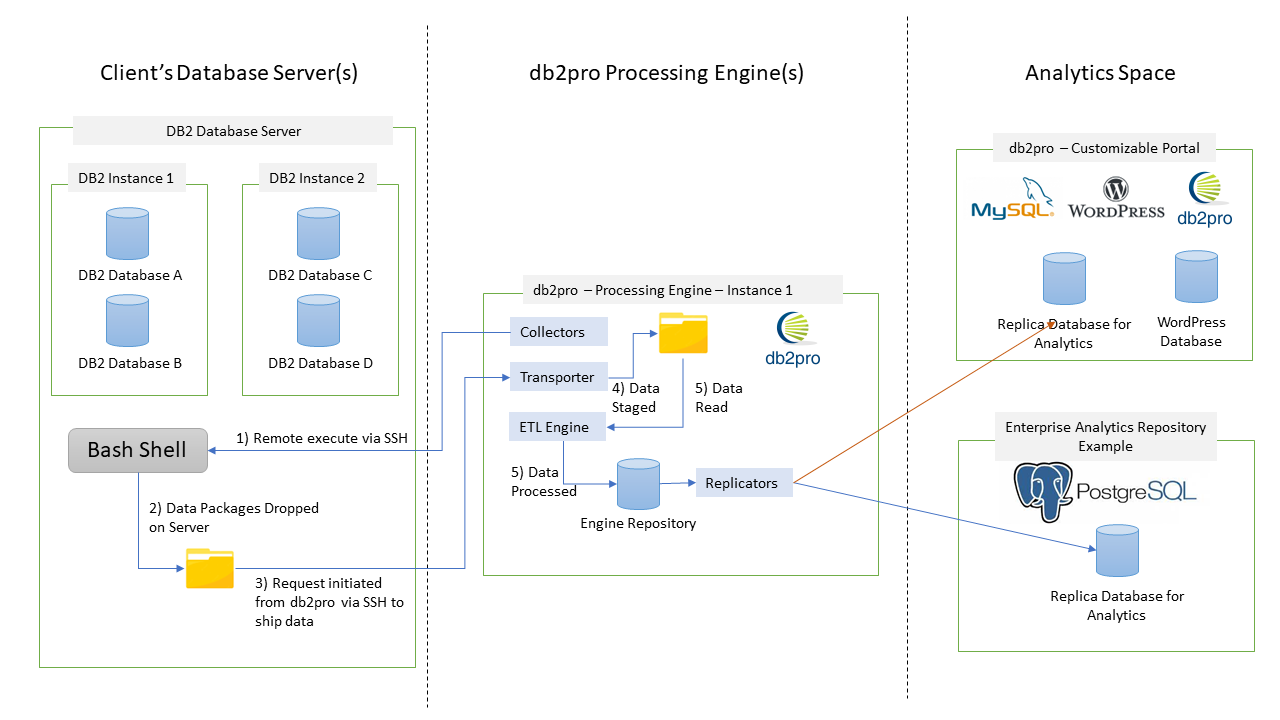

db2pro Data Flow

The diagram shows the overall flow of data into and out of the db2pro Processing Engine. In this configuration, the Collectors run over a pre-existing SSH setup, which is the recommended way to run them. We also supply a simplified set of Collectors that run through the DB2 Client.

- The Collectors send commands over the SSH channel to the target database server to discover "activated databases" and collect performance data.

- The Collectors create data packages on the target server and place them in a designated location.

- The Transporter sends commands over the SSH channel to the target server to push the data packages back to the db2pro Processing Engine.

- The Transporter stages the data packages in a designated location on the db2pro Processing Engine.

- The ETL Engine reads the data packages in chronological order, unpacks them, and loads them into the Engine Repository.
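The steps above require the ETL Engine to process staged packages in chronological order. A minimal sketch, assuming (hypothetically) that each package filename ends with a `YYYYMMDDHHMMSS` collection timestamp, such as `db01_20240105120000.tar.gz`, might order the staged packages as follows. The naming convention, the `.tar.gz` suffix, and the helper names are illustrative, not db2pro's actual format.

```python
from datetime import datetime
from pathlib import Path

# Hypothetical naming convention: <server>_<YYYYMMDDHHMMSS>.tar.gz
TIMESTAMP_FORMAT = "%Y%m%d%H%M%S"
SUFFIX = ".tar.gz"


def package_timestamp(filename):
    """Extract the collection timestamp embedded in a package filename."""
    stem = filename[: -len(SUFFIX)]
    # rsplit keeps server names that themselves contain underscores intact.
    _server, stamp = stem.rsplit("_", 1)
    return datetime.strptime(stamp, TIMESTAMP_FORMAT)


def order_packages(filenames):
    """Return package filenames oldest-first, ignoring non-package files."""
    packages = [name for name in filenames if name.endswith(SUFFIX)]
    # Sort by timestamp; break ties by name so the order is deterministic.
    return sorted(packages, key=lambda name: (package_timestamp(name), name))


def packages_in_order(staging_dir):
    """List the packages in a staging directory in chronological order."""
    return order_packages(p.name for p in Path(staging_dir).iterdir())
```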

Once the data is in the Engine Repository, the Replicators incrementally push it to target environments, such as the db2pro Portal or an enterprise analytics repository.
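How the Replicators track what they have already pushed is not described here. One common approach to incremental replication is a high-water mark: remember the largest load identifier already pushed and send only newer rows on the next run. The sketch below assumes each repository row carries a monotonically increasing `load_id`; `Row`, `replicate_increment`, and the `push` callback are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Row:
    # Hypothetical repository row: an increasing load id plus its payload.
    load_id: int
    payload: dict = field(default_factory=dict)


def replicate_increment(source_rows, last_replicated_id, push):
    """Push rows newer than the high-water mark and return the new mark.

    push is a callback that delivers a list of rows to the target
    environment. If nothing is new, push is not called and the mark
    is returned unchanged.
    """
    new_rows = sorted(
        (row for row in source_rows if row.load_id > last_replicated_id),
        key=lambda row: row.load_id,
    )
    if not new_rows:
        return last_replicated_id
    push(new_rows)
    return new_rows[-1].load_id
```

Persisting the returned mark only after `push` succeeds means a failed run is simply retried from the previous mark.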

Use db2pro for Monitoring and Analytics

The db2pro system supports both monitoring and analytics. Although db2pro is primarily intended as an analytics platform, you can also use it for monitoring by increasing the Collectors' frequency, provided that the db2pro Processing Engine(s) have enough processing capacity. The db2pro system scales horizontally: you can start multiple db2pro Processing Engines to handle the additional load.
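When several Processing Engines share the load, each target server needs exactly one owning engine. The document does not say how db2pro divides the work, so the following is a minimal sketch under the assumption that servers are assigned by a stable hash; `assign_engine` and `partition` are hypothetical names.

```python
import hashlib


def assign_engine(server, engines):
    """Deterministically map a target server to one of the processing engines."""
    if not engines:
        raise ValueError("at least one processing engine is required")
    # Use a stable digest; Python's built-in hash() of str is salted per process.
    digest = hashlib.sha256(server.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(engines)
    return engines[index]


def partition(servers, engines):
    """Group target servers by the engine responsible for them."""
    plan = {engine: [] for engine in engines}
    for server in servers:
        plan[assign_engine(server, engines)].append(server)
    return plan
```

Modulo assignment is simple but reassigns most servers whenever the number of engines changes; consistent hashing would limit that churn if engines are added or removed often.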

Minimum Hardware Requirements

The db2pro Processing Engine can run on as few as 2 virtual CPUs and 8 GB of RAM.